TL;DR: Ditail offers a training-free method for novel image generations and fine-grained manipulations of content/style, enabling flexible integrations of existing pre-trained Diffusion models and LoRAs.

Abstract

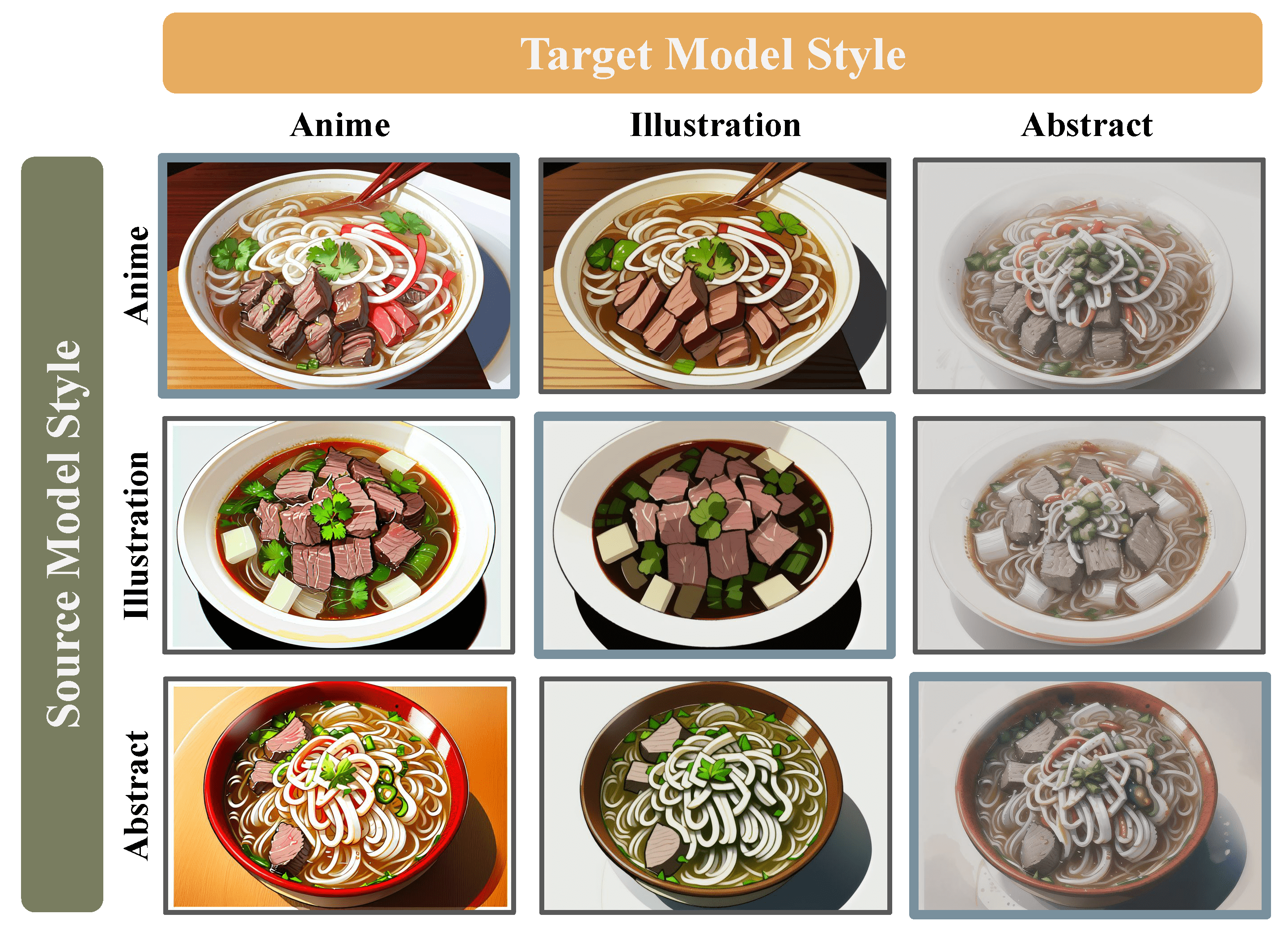

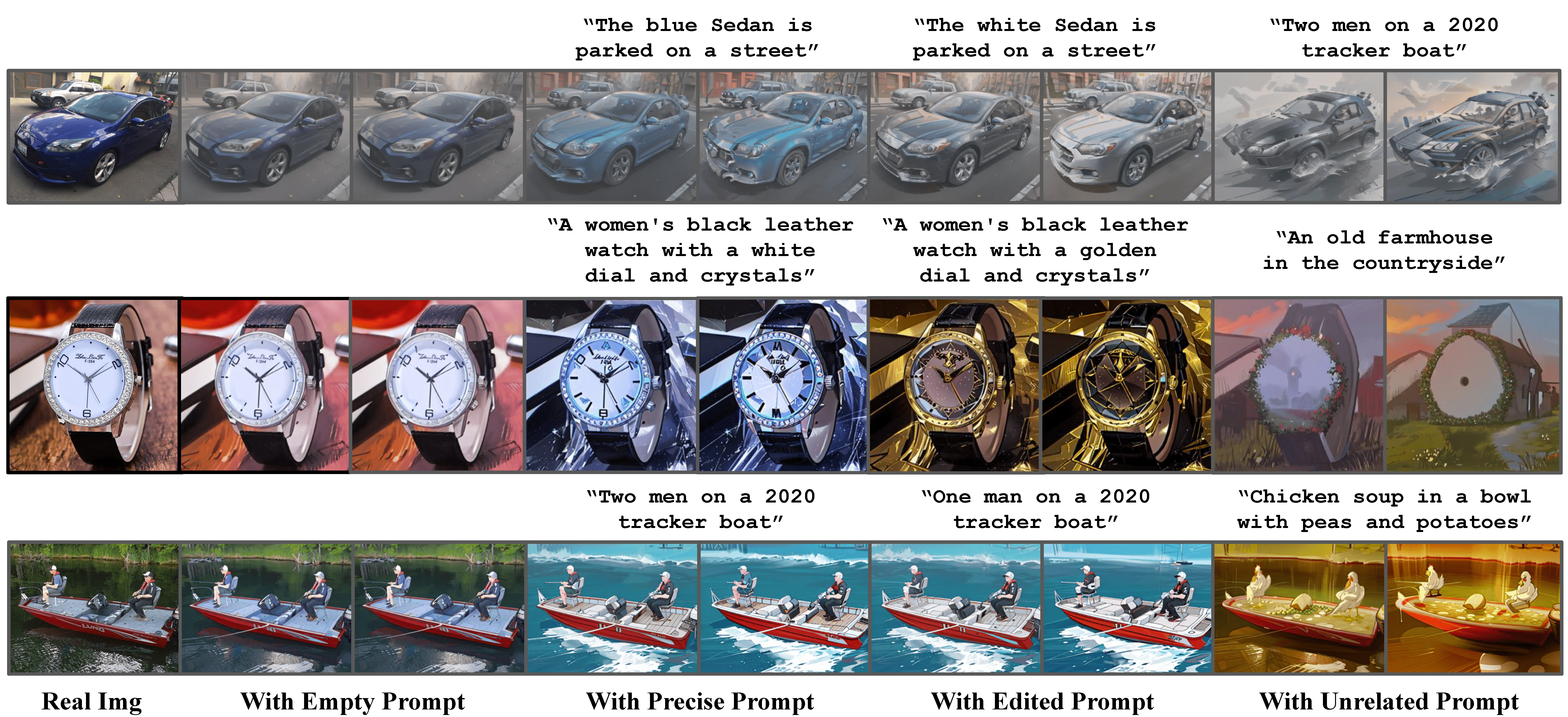

Diffusion models excel at generating high-quality images and are easy to extend, making them extremely popular among active users who have created an extensive collection of diffusion models with various styles by fine-tuning base models such as Stable Diffusion. Recent work has focused on uncovering semantic and visual information encoded in various components of a diffusion model, enabling better generation quality and more fine-grained control. However, those methods target improving a single model and overlook the vastly available collection of fine-tuned diffusion models. In this work, we study the combinations of diffusion models. We propose Diffusion Cocktail (Ditail), a training-free method that can accurately transfer content information between two diffusion models. This allows us to perform diverse generations using a set of diffusion models, resulting in novel images that are unlikely to be obtained by a single model alone. We also explore utilizing Ditail for style transfer, with the target style set by a diffusion model instead of an image. Ditail offers a more detailed manipulation of the diffusion generation, thereby enabling the vast community to integrate various styles and contents seamlessly and generate any content of any style.

Paper: https://arxiv.org/abs/2312.08873

Code: https://github.com/MAPS-research/Ditail

Demo: https://huggingface.co/spaces/MAPS-research/Diffusion-Cocktail

Project Page: https://maps-research.github.io/Ditail/

Thank you for sharing! I’ve had these ideas for edits to pictures but could never get an AI to make it right from scratch. Giving it a try myself produced exactly what I was thinking of!