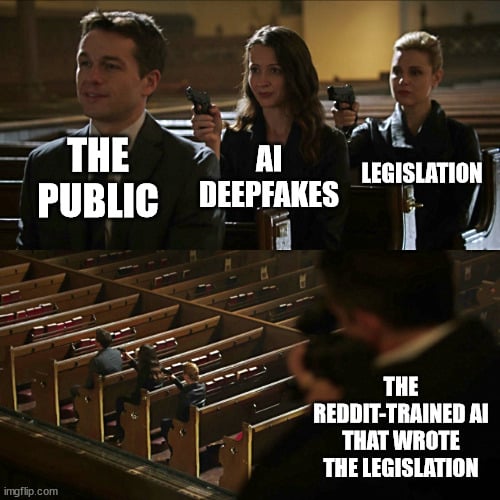

Should we trust an LM to write legal definitions, of a deepfake in this case? It seems the state rep is unable to proofread the model output, since he was “really struggling with the technical aspects of how to define what a deepfake was.”

The Speznasz.

The stupid. It hurts.

These types of things are exactly what Generative AI models are good for, as much as Internet people don’t want to hear it.

Things that are massively repeatable based on previous versions (like legislation, contracts, etc.) are pretty much perfect for it. These are just tools for already competent people. So in theory you have GenAI crank out the boring stuff and have an expert “fill in the blanks,” so to speak.

Someone should run all the law books through ChatGPT so we can have a free open-source lawyer on our phones.

During a traffic stop: “Hold on officer, I gotta ask my lawyer. It says to shut the hell up.”

Cop still shoots him in the head so he can learn his lesson. He pulled out his phone!

Or lawyer-bot cites some sovereign citizen crap as if it were established legal precedent. “You can’t prosecute me in this court! Your flag has a gold fringe on it!”

A new meme I expect to take hold is how tempting ChatGPT is, and how that temptation will only grow as LLMs and similar tools get better and as our externalized knowledge habits change.

🙊 and the groupthink nonsense continues…

Y’all know those grammar checking thingies? Yeah, same basic thing. You know when you’re stuck writing something and your wording isn’t quite what you’d like? Maybe you ask another person for ideas; same thing.

Is it smart to ask AI to write something outright? About as smart as asking a random person on the street to do the same. Is it smart to use proprietary AI that has ulterior political motives? Things might leak, like this, by proxy. Is it smart for people to ask others to proofread their work? Does it matter if that person is a grammar checker that makes suggestions for alternate wording and has most accessible human-written language at its disposal?

The problem is that the tools used to detect AI writing are not accurate. At the end of the day, as long as the information is worded correctly and the information is correct, that’s what matters. When you, as a defense lawyer, have AI write an argument citing cases that don’t exist… that’s when there are problems.

This chud uploaded potentially sensitive information to a public service. People really need education on how to intelligently use these services.

> This chud uploaded potentially sensitive information to a public service.

A bill draft, which eventually/maybe gets signed and is public by its very nature, is sensitive?

> People really need education on how to intelligently use these services.

Agreed in principle, but I don’t see how what he did was wrong… other than calling ChatGPT a subject matter expert.