Hospital bosses love AI. Doctors and nurses are worried.

4th year medical student. AI is not ready to be making any diagnostic or therapeutic decisions. What I do think we’re just about ready for is simply making notes faster to write. Discharge summaries especially could be the first real step AI takes into healthcare. For those unaware, a discharge summary is a chronological description of all the major events in a patient’s hospitalization, explaining why they presented, how they were diagnosed, any complications that arose, and how they were treated. They are just summaries of all of the previous daily notes that were written by the patient’s doctors. An AI could feasibly pull data only from these notes, rephrase it for clarity and succinctness, and save doctors 10-20 minutes of writing on every discharge they do.

This is how most of the tech industry thinks – looking at the existing process and trying to see which parts can be automated – but I’d argue that it’s actually not that great of a framework for finding good uses for technology. It’s an artifact of a VC-funded industry, which sees technology primarily as a way to save costs on labor.

In this particular case, I do think LLMs would be great at lowering labor costs associated with writing summaries, but you’d end up with a lot of cluttered, mediocre summaries clogging up your notes, just like all the other bloatware that most of our jobs now force us to deal with.

Here is a gift link if anyone wants the full article without paywall. https://wapo.st/3YI6R41

This is a bad idea. I used to do call centre customer service, and while it wasn’t implemented on our side, some contacts that got routed to us seemed to have been handled by chatbots first, and those people weren’t happy.

The human condition is complex and organic, and circumstances are often unique. A brain-dead statistical machine like AI cannot be expected to handle these things well.

Now, if it is for health-related big data analytics, like epidemic modeling, demographic changes, or the effect of dietary patterns (notoriously hard to model, actually), then I don’t see anything wrong with that. Caring for people? No way.

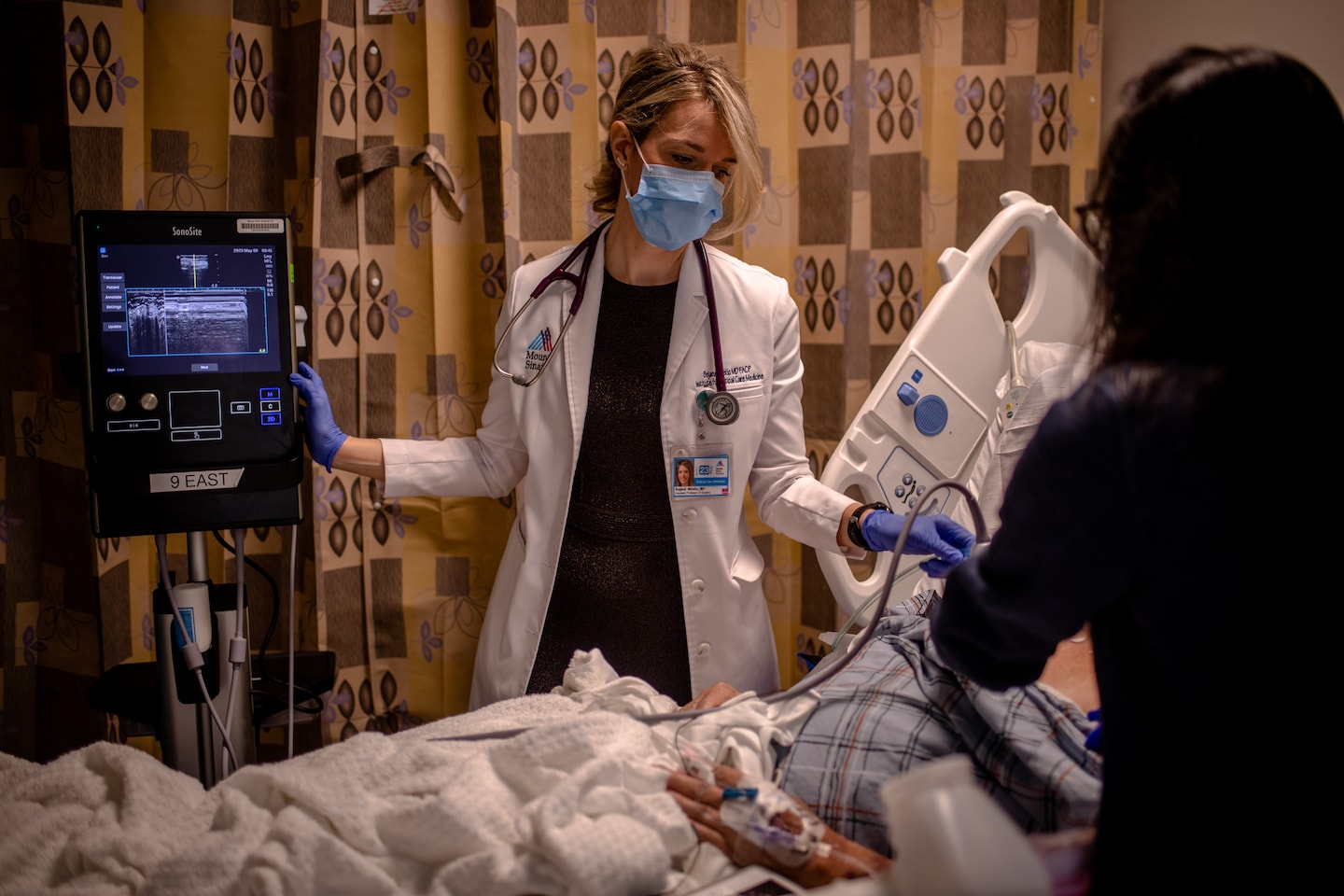

It’s not going to be about patient interaction, at least not at first. This is about EMR-integrated analytics and diagnostic tools intended to help streamline the workflows of overworked doctors and nurses and to identify patterns that humans may miss.

Good, doctors should be worried. Because if what you have isn’t a textbook issue, they won’t search any further and will tell you, with their heads held high, “live with it”. And they get it wrong half the time too. Pretty much what AI would do. Characters like Dr. House are a myth.

Nurses should not be worried, because they are the ones who take the imaging, put on bandages, and clean up.

I welcome AI if it means shutting up ivory tower doctors.

All the power to nurses!