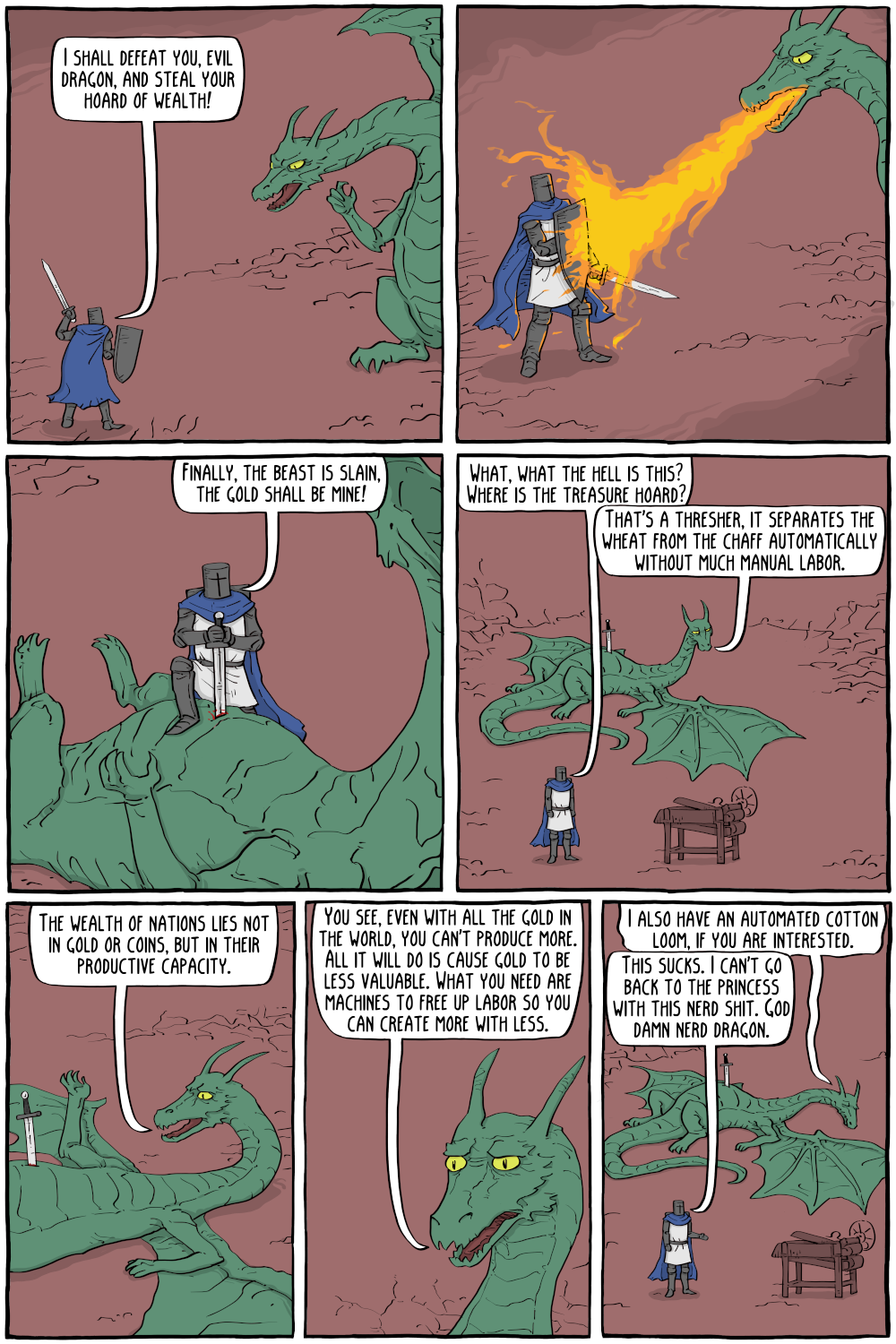

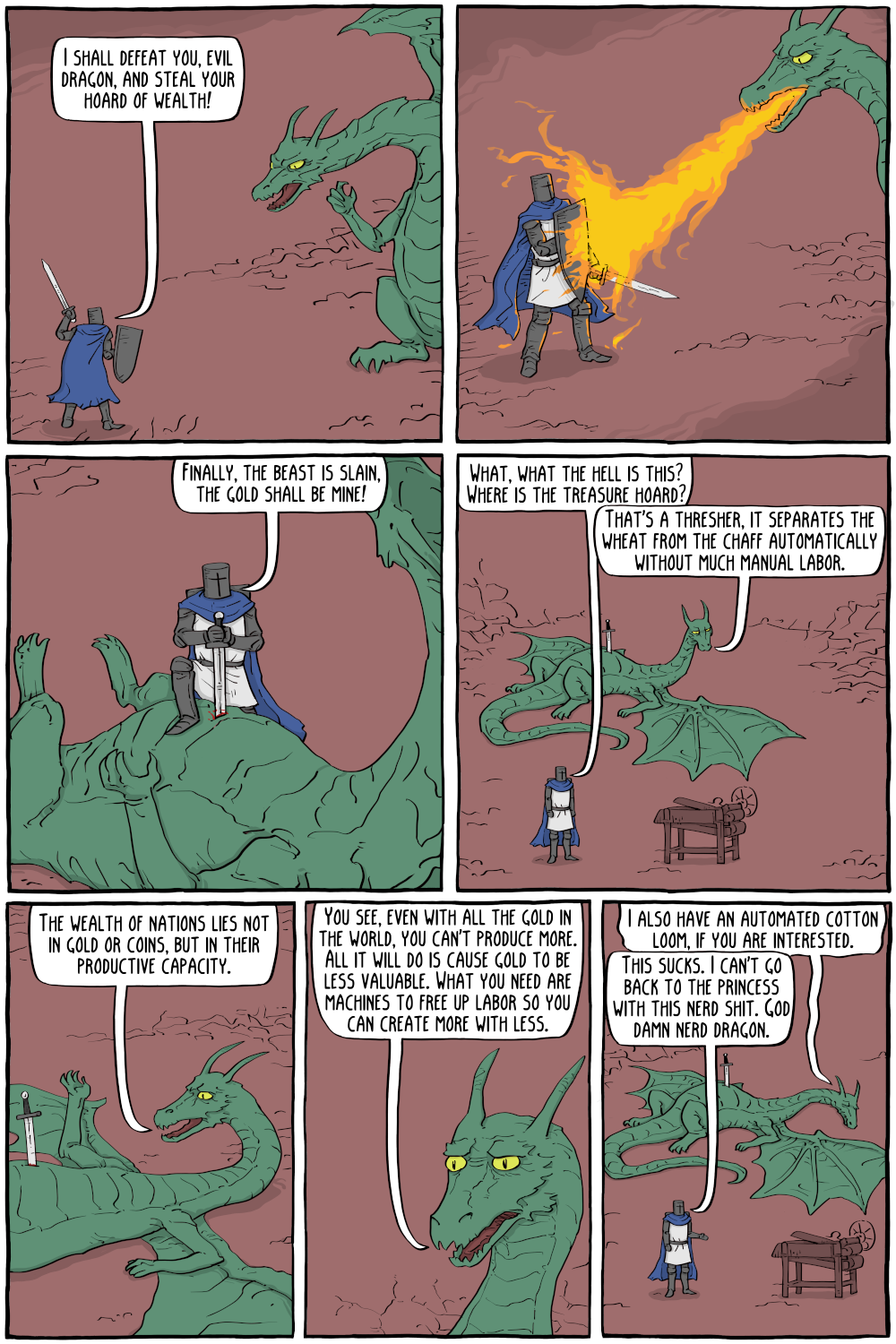

Automating jobs away is a good thing

I remember seeing someone post this comic a while back and thought it was a pithy explanation.

https://static.existentialcomics.com/comics/TheWealthofDragons.png

If you’re interested in home automation, I think that there’s a reasonable argument for running it on separate hardware. It doesn’t need much by way of hardware, but you don’t want to have to take it down, especially if it’s doing things like lighting control.

Same sort of idea for some data-logging systems, like weather stations or ADS-B receivers.

Other than that, though, I’d probably avoid running an extra system just because I have hardware. More power usage, heat, and maintenance.

EDIT: Maybe also hook it up to a power management device, if you don’t already have one set up, so that you can power-cycle your other hardware remotely.

I mean, some of those EOLed nearly a decade ago.

You can argue over what a reasonable EOL is, but all hardware is going to EOL at some point, and at that point, it isn’t going to keep getting updates.

Throw enough money at a vendor, and I’m sure you can get an extended support contract that will keep it going for as long as you’re willing to keep paying (some businesses pay for support on truly ancient hardware), but this is a consumer broadband router. That’s unlikely to make much sense here: the hardware isn’t worth much, it isn’t going to be terribly expensive to replace, and, especially if you’re using the wireless functionality, you probably want the support for newer WiFi standards that updated hardware will bring anyway.

I do think that there’s maybe a good argument that EOLing hardware should be handled in a better way. Like, maybe hardware should ship with an EOL sticker, so that someone can glance at hardware and see if it’s “expired”. Or maybe network hardware should have some sort of way of reporting EOL in response to a network query, so that someone can audit a network for EOLed hardware.

But EOLing hardware is gonna happen.

which of course doesn’t then require you to pay monthly to actually use the stupid thing.

I think the idea here is that the businesses can lay off some of their in-house IT staff and pay Microsoft a lesser amount instead; the in-house IT staff does get paid monthly.

The Link device is designed to be a compact, fanless, and easy-to-use cloud PC for your local monitors and peripherals. It’s meant to be the ideal companion to Microsoft’s Windows 365 service, which lets businesses transition employees over to virtual machines that exist in the cloud and can be streamed securely to multiple devices.

It sounds like it’s part of a broader strategy to have companies outsource their IT to Microsoft.

I assume that early access games are in the running when they exit EA. Presumably, that’s when they’re at their strongest. Doesn’t seem to me like it’d be fair to treat them as being entered when they enter EA, as they aren’t fully developed yet.

If they’re removable, could just put actual floppy drives in.

EDIT: Oh, they’re flip-down drawer covers rather than fixed panels. Well, still might be removable.

It looks like the $75/mo rate is for their “premium” package. Their standard one is $39/mo.

It looks like that’s on the high side, but not radically so compared to typical American newspapers.

https://www.thepricer.org/newspaper-subscriptions-cost/

Digital Pass Subscriptions

Local/regional papers – $10 to $30 per month

Example: Seattle Times Digital – $7.99 per month

National publications – $15 to $50 per month

Example: The Washington Post Digital – $39.99 per month

Also, note that FT is British, not American.

Looks fine to me.

Little side question: Will the Wi-Fi and Bluetooth on the motherboard work in Arch? From what I could gather, the drivers for it should be in the latest kernel, but I’m not 100% sure.

If they don’t work for some reason and you can’t get them working or need some sort of driver fix, you can always, worst case, fall back to a USB dongle or similar until they do. Obviously that’s not preferable, but you shouldn’t wind up stuck without them no matter what.

I mean, they’ve done this when places charge them money to index the news articles there.

It hardly seems reasonable to both mandate that they index a given piece of news media and that they pay a fee to do so.

Alexey Pajitnov, who created the ubiquitous game in 1984, opens up about his failed projects and his desire to design another hit.

He prefers conversations about his canceled and ignored games, the past designs that now make him cringe, and the reality that his life’s signature achievement probably came decades ago.

The problem is that that guy created what is probably the biggest, most timeless simple video game in history. Your chances of repeating that are really low.

It’s like you discover fire at 21. The chances of doing it again? Not high. You could maybe do other successful things, but it’d be nearly impossible to do something as big again.

The downside of building the phone/tablet into the car, though, is that phones change more quickly than cars.

A 20-year-old car can be perfectly functional. A 20-year-old smartphone is insanely outdated. If the phone is built into the car, you’re stuck with it.

Relative to a built-in system, I’d kind of rather just have a standard mounting point with security attachments and have the car computer be upgradeable. 3DIN, maybe.

I get the “phone is small” argument, but the phone is upgradeable.

And I’d definitely rather have physical controls for a lot of things.

Plus, even if you manage to never, ever have a drive fail, accidentally delete something that you wanted to keep, inadvertently screw up a filesystem, crash into a corruption bug, have malware destroy stuff, make an error in writing a script that causes it to wipe data, just realize that an old version of something you overwrote was still something you wanted, or run into any of the other ways in which you could lose data…

You gain the peace of mind of knowing that your data isn’t a single point of failure away from being gone. I remember some pucker-inducing moments before I ran backups. Even aside from not losing data on a number of occasions, I could sleep a lot more comfortably on the times that weren’t those occasions.

That’s not a completely reliable fix, a third party library could still call setenv and trigger crashes, there’s still a risk of data races, but we’ve observed a significant reduction in SIGABRT volumes.

Hmm. If they want a dirty hack, I expect they could write a library interposer that overrides the setenv(3) and getenv(3) symbols with versions that grab a global “environment variable” lock before calling the real functions.

They say that they’re having problems with third-party libraries that use environment variables. If those third-party libraries are statically linked against libc, I suppose that won’t work, but as long as they’re dynamically linked, it should be okay.
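A minimal sketch of that interposer, assuming Linux with glibc 2.34 or later (where dlsym() and the pthread functions live in libc itself). The names `env_lock`, `real_getenv`, `real_setenv` and `resolve_real_functions` are hypothetical; the technique itself is the usual `dlsym(RTLD_NEXT, ...)` wrapper. It only serializes calls that go through the dynamic getenv/setenv symbols: putenv(), unsetenv(), direct reads of `environ` and libc-internal lookups are not covered. As the EDIT below points out, the returned buffer is still read outside the lock.

```c
#define _GNU_SOURCE
#include <dlfcn.h>
#include <pthread.h>
#include <stdlib.h>

/* The real libc implementations, looked up once on first use. */
static char *(*real_getenv)(const char *);
static int (*real_setenv)(const char *, const char *, int);
static pthread_once_t resolve_once = PTHREAD_ONCE_INIT;

/* One process-wide "environment variable" lock shared by both wrappers. */
static pthread_mutex_t env_lock = PTHREAD_MUTEX_INITIALIZER;

static void resolve_real_functions(void) {
    /* RTLD_NEXT: the next definition in search order after this object (libc's).
       No error handling in this sketch: a statically linked program would crash. */
    real_getenv = (char *(*)(const char *))dlsym(RTLD_NEXT, "getenv");
    real_setenv = (int (*)(const char *, const char *, int))dlsym(RTLD_NEXT, "setenv");
}

char *getenv(const char *name) {
    pthread_once(&resolve_once, resolve_real_functions);
    pthread_mutex_lock(&env_lock);
    char *value = real_getenv(name);
    pthread_mutex_unlock(&env_lock);
    /* The caller dereferences `value` after the lock is released, so reads of the
       returned buffer are still unsynchronized with later setenv() calls. */
    return value;
}

int setenv(const char *name, const char *value, int overwrite) {
    pthread_once(&resolve_once, resolve_real_functions);
    pthread_mutex_lock(&env_lock);
    int rc = real_setenv(name, value, overwrite);
    pthread_mutex_unlock(&env_lock);
    return rc;
}
```

To use it, build it as a shared object (for example `gcc -shared -fPIC -o envlock.so envlock.c`, adding `-ldl -lpthread` on older glibc; `envlock` is a placeholder name) and start the program with `LD_PRELOAD=./envlock.so`.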

EDIT: Though, doing things the way they do, you’ve still got an atomic-update problem with the returned buffer if you don’t want to leak memory. One thread might have half-updated the contents of the buffer while another is still reading it after returning from the interposer’s version of the function. That shouldn’t directly crash, but you can get a mangled environment variable value. And unlike the getenv() call itself, access to the buffer won’t come with any synchronization guarantees.

thinks

This is more of a mind-game solution, but…

Well, you can’t track the lifetime of pointers to a buffer, so there’s no true fix that doesn’t leak memory. The only absolute fix is to return a new buffer from getenv() for each unique setenv(), because POSIX provides no lifetime bounds.

But if you assume that anything midway through a buffer read is probably going to finish pretty soon, which is probably true…

You can maybe play tricks with mmap() and mremap(), if you’re willing to blow a page per environment variable that you want to update, a page of virtual address space per update, and some temporary memory. The buffer you return from the interposer’s getenv() is an mmap()ed range. In the interposer’s setenv(), if the value is modified, you mremap() with MREMAP_DONTUNMAP, and future calls to getenv() return the new address. The old range stays mapped but empty, so accesses to it page-fault, and a userspace page fault handler (userfaultfd) on that range can, I suppose (I haven’t written userspace page fault handlers myself), block the memory read until the new value is visible and synchronize on visibility of changes across threads.

If you assume that any read of the buffer is sequential and moving forward, then if a page fault triggers on an attempted access at the start of the page, you can return the latest value.

If you get a fault at an address in the middle of the buffer, and you still have a copy of the old value, then you’ve smacked into code that was in the middle of reading the buffer. Return the old value.

A given amount of time after an update, you’re free to purge the old values kept around by setenv(). You can do that from within the interposer’s functions.

You can never eliminate the chance that a thread has read the first N bytes of an environment variable buffer, then gone to sleep for ten minutes, then suddenly wants the remainder. In that case, you have to allow for the possibility that the thread sees part of the old environment variable value and part of the new one. But you can spend temporary memory to remember old values for longer, making that ever more unlikely.
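The mmap()/mremap() version is Linux-specific and needs a page fault handler. Here is a much simpler sketch of just the retention part of the idea, assuming it runs under the interposer’s global lock. Instead of freeing the old buffer when a variable changes, it parks the buffer in a small ring and frees only the one that falls off the end. `struct env_slot`, `slot_update()`, `slot_release()` and `RETAINED_VERSIONS` are hypothetical names. The ring is count-based rather than the time-based purge described above. It also has a weaker failure mode than the page-fault trick: once a buffer leaves the ring, a straggling reader is doing a use-after-free, not just getting a torn value.

```c
#define _POSIX_C_SOURCE 200809L
#include <stdlib.h>
#include <string.h>

#define RETAINED_VERSIONS 4  /* hypothetical knob: old values kept alive per variable */

/* Hypothetical per-variable record: the live value plus a ring of retired
   buffers that stay allocated so in-flight readers of them remain valid. */
struct env_slot {
    char *current;
    char *retired[RETAINED_VERSIONS];
    size_t next_retired;  /* ring slot the next retired buffer goes into */
};

/* Publish a new value (call with the interposer's lock held). The previous
   buffer is retired rather than freed; only the oldest retired buffer, which
   falls off the end of the ring, is actually released. Returns -1 on OOM. */
int slot_update(struct env_slot *slot, const char *value) {
    char *fresh = strdup(value);
    if (fresh == NULL) return -1;
    if (slot->current != NULL) {
        free(slot->retired[slot->next_retired]);  /* free(NULL) is a no-op */
        slot->retired[slot->next_retired] = slot->current;
        slot->next_retired = (slot->next_retired + 1) % RETAINED_VERSIONS;
    }
    slot->current = fresh;
    return 0;
}

/* Free everything, e.g. at shutdown. */
void slot_release(struct env_slot *slot) {
    for (size_t i = 0; i < RETAINED_VERSIONS; i++) {
        free(slot->retired[i]);
        slot->retired[i] = NULL;
    }
    free(slot->current);
    slot->current = NULL;
    slot->next_retired = 0;
}
```

Raising `RETAINED_VERSIONS` is the “spend temporary memory to remember old values for longer” trade-off in its crudest form.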

When the market is flooded, any paid title has an incredibly difficult time standing out.

If that’s true, and it’s simply that people can’t find premium games even though the demand exists, that seems like the kind of thing you could address via branding. That is, you make a “premium publisher” or studio or something that keeps pumping out premium titles and builds a reputation. I mean, there are lots of product categories where brands develop; it’s not like you normally have a competitive market with lots of entrants, prices get driven down, and then brands never emerge. And I can’t think of a reason for phone apps to be unique in that regard.

I think that there’s more to it than that.

My own guesses are:

I won’t buy any apps from Google, because I refuse to have a Google account on my phone, because I don’t want to be building a profile for Google. I use stuff from F-Droid. That’s not due to an unwillingness to pay for games (I buy many games on other platforms) but simply due to concerns over data privacy. I don’t know how widespread that position is, and it’s probably not the dominant factor. But my guess is that if I do it, at least a few other people do, and that’s a pretty difficult barrier for a commercial game vendor to overcome.

Platform demographics. My impression is that people playing on a phone may have less disposable income than a typical console player (who bought a piece of hardware for the sole and explicit purpose of playing games) or a computer player (a “gaming rig” being seen as a higher-end option to some extent today). If you’re aiming at value consumers, you need to compete more strongly on price.

This is exacerbated by the fact that a mobile game is probably a partial substitute good for a game on another platform.

In microeconomics, substitute goods are two goods that can be used for the same purpose by consumers.[1] That is, a consumer perceives both goods as similar or comparable, so that having more of one good causes the consumer to desire less of the other good. Contrary to complementary goods and independent goods, substitute goods may replace each other in use due to changing economic conditions.[2] An example of substitute goods is Coca-Cola and Pepsi; the interchangeable aspect of these goods is due to the similarity of the purpose they serve, i.e. fulfilling customers’ desire for a soft drink. These types of substitutes can be referred to as close substitutes.[3]

They aren’t perfect substitutes. Phones are very portable: you can’t lug a console or even a laptop around with you the way you can a phone and just slip it out of your pocket while waiting in line. But to some degree, I think that for most people, you can choose to game on one or the other if you have more than one of those platforms available.

So if you figure that, in many cases, people who have the option to play a game on any of those platforms will choose a non-mobile platform when one is accessible to them, then the people playing games on mobile might tend to be the ones for whom a phone is the only available platform, and it might just be that they’re willing to spend less money. Like, my understanding is that it’s pretty common to get kids smartphones these days…but to some degree, that “replaces” having a computer. So if you’ve got a bunch of kids in school using phones as their gaming platform, or maybe folks who don’t have a lot of cash floating around, they’re probably gonna have a more-limited budget to spend on games and be more price-sensitive.
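A standard way to put a number on “partial substitute” is the cross-price elasticity of demand. It measures the percentage change in demand for good $x$ (say, mobile games) per percentage change in the price of good $y$ (say, console or PC games). Goods are substitutes when it is positive and complements when it is negative:

$$
E_{xy} = \frac{\partial Q_x}{\partial P_y} \cdot \frac{P_y}{Q_x}, \qquad E_{xy} > 0 \ \text{(substitutes)}, \qquad E_{xy} < 0 \ \text{(complements)}
$$

Close substitutes like Coca-Cola and Pepsi would have a large positive $E_{xy}$. The argument above amounts to saying that mobile and non-mobile games have a positive but smaller one. That is an interpretation, not a measured figure.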

kagis

https://www.pewresearch.org/internet/fact-sheet/mobile/

Smartphone dependency over time

Today, 15% of U.S. adults are “smartphone-only” internet users – meaning they own a smartphone, but do not have home broadband service.

Reliance on smartphones for online access is especially common among Americans with lower household incomes and those with lower levels of formal education.

I think that for a majority of game genres, the hardware limitations of the smartphone are pretty substantial. It’s got a small screen. Its inputs typically involve covering up part of the screen with fingers. The inputs aren’t terribly precise (yes, you can use a Bluetooth input device, but for many people, part of the point of a mobile platform is that you can have it everywhere, and lugging a game controller around is a lot more awkward). The hardware has to be pretty low-power, so compute power is limited. Especially on Android, the hardware varies a fair bit, so the developer can’t rely on specific hardware being there the way they can on consoles. There’s a lot of GPU variation. Screen resolutions vary wildly, and games have to be able to adapt to that. Phones do have the ability to use gestures, and some games can make use of GPS hardware and the like, but taken as a whole, I think games tend to be disadvantaged a lot more by the cons of mobile hardware than they’re advantaged by the pros.

Environment. While one can sit down on a couch in a living room and play a mobile game the way one might a console game, I think that many people playing mobile games have environmental constraints that a developer has to deal with. Yes, you can use a phone while waiting in line at the grocery store. But the flip side is that the game also has to be amenable to being played for just a few minutes in a burst. You can’t expect the player to build up much mental context. The developer may or may not be able to expect the player to be listening to sound. Playing Stellaris or something like that is not going to be very friendly to short bursts.

Battery power. Even if you can run a game on a phone, heavyweight games are going to drain battery at a pretty good clip. You can do that, but then the user’s either going to have to limit playtime or have a source of power.

Assuming that this is the episode and the Factorio dev post that it references, I think that that’s a different issue. That dev was also using Sway under Wayland, but was talking about how Factorio apparently doesn’t immediately update the drawable area on a window size change: it takes three frames, and Sway was making this very visible.

I use the Sway window manager, and a particularity of this window manager is that it will automatically resize floating windows to the size of their last submitted frame. This has unveiled an issue with our graphics stack: it takes the game three frames to properly respond to a window resize. The result is a rapid tug-of-war, with Sway sending a ton of resize events and Factorio responding with outdated framebuffer sizes, causing the chaos captured above.

I spent two full days staring at our graphics code but could not come up with an explanation as to why this is happening, so this work is still ongoing. Since this issue only happens when running the game on Wayland under Sway, it’s not a large priority, but it was too entertaining not to share.

I’d guess that he’s maybe using double- or triple-buffering at the SDL level or something like that.
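Here is a toy model of the tug-of-war described in the quoted post. It assumes the simplest possible behavior on both sides: the game renders each frame at the window size it observed `lag` frames earlier, and the compositor immediately resizes the floating window to the size of each frame it receives. `simulate_resizes()` and its parameters are hypothetical, not anything taken from Factorio or Sway. Real resizes also involve continuous drags and protocol round-trips rather than a single step.

```c
/* Toy model (hypothetical): the window starts at `initial`, the user resizes it to
   `user_size` just before frame 0, the game renders each frame at the window size
   it saw `lag` frames earlier (lag >= 0), and the compositor resizes the floating
   window to match every frame it receives. Writes the window size at each of the
   n frames into sizes[] and returns how many compositor resizes happened. */
int simulate_resizes(int initial, int user_size, int lag, int *sizes, int n) {
    int window = user_size;
    int resizes = 0;
    for (int t = 0; t < n; t++) {
        sizes[t] = window;
        /* Frame size lags the window: before frame `lag`, the game still uses `initial`. */
        int frame = (t >= lag) ? sizes[t - lag] : initial;
        /* Sway-like rule: snap the floating window to the last submitted frame size. */
        if (frame != window) {
            window = frame;
            resizes++;
        }
    }
    return resizes;
}
```

With `lag` set to 0, the window stays at the user’s new size and the compositor never resizes it. With `lag` set to 3, a resize from 800 to 1000 gets snapped back to 800 on the next frame and comes back at frame 4. After that it keeps flipping with a period of four frames (`lag + 1`) and never settles.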

The Jia Tan xz backdoor attack did get flagged by some automated analysis tools (the attackers had to get the analysis tools modified so that the code would pass), and that was a pretty sophisticated attack. The people running the testing didn’t catch it and trusted the Jia Tan group that it was a false positive that needed to be fixed, but the tools were still putting up warning lights.

More sophisticated attackers will probably set up their own code analysis environments mirroring the ones they know of online, run every code analysis tool they can locally before making the code visible, and tweak it until it passes, but I think that it definitely raises the bar.

You could also have some analysis tools that aren’t made public but are run against important public code repositories specifically to make this more difficult.

I don’t think that that’s a counter to the specific attack described in the article:

The malicious packages have names that are similar to legitimate ones for the Puppeteer and Bignum.js code libraries and for various libraries for working with cryptocurrency.

That’d be a counter if you have some known-good version of a package and are worried about updates containing malicious software.

But in the described attack, they’re not trying to push malicious software into legitimate packages. They’re hoping that a dev will accidentally use the wrong package (which presumably is malicious from the get-go).
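Pinning a known-good version doesn’t help here. One kind of automated check that does target this attack is flagging dependency names that are suspiciously close to a trusted package name without matching it exactly. Below is a minimal sketch using plain Levenshtein edit distance. The trusted list, the threshold and the function names are hypothetical, and real tooling would need a much larger trusted list and more name normalization.

```c
#include <stddef.h>
#include <string.h>

#define MAX_NAME_LEN 63  /* sketch limit: keeps edit_distance() allocation-free */

/* Levenshtein distance (insertions, deletions, substitutions) between a and b,
   computed with a single rolling row. Returns -1 if b is longer than MAX_NAME_LEN. */
int edit_distance(const char *a, const char *b) {
    size_t la = strlen(a), lb = strlen(b);
    if (lb > MAX_NAME_LEN) return -1;
    int row[MAX_NAME_LEN + 1];
    for (size_t j = 0; j <= lb; j++) row[j] = (int)j;    /* distance from "" */
    for (size_t i = 1; i <= la; i++) {
        int diag = row[0];                               /* D[i-1][j-1] */
        row[0] = (int)i;
        for (size_t j = 1; j <= lb; j++) {
            int up = row[j];                             /* D[i-1][j] */
            int best = diag + (a[i - 1] != b[j - 1]);    /* substitute or match */
            if (up + 1 < best) best = up + 1;            /* delete from a */
            if (row[j - 1] + 1 < best) best = row[j - 1] + 1;  /* insert into a */
            row[j] = best;
            diag = up;
        }
    }
    return row[lb];
}

/* Hypothetical policy: return the trusted name that `requested` looks like, or
   NULL if it is an exact trusted name or not within max_distance of any. */
const char *lookalike_of(const char *requested, const char *const *trusted,
                         size_t n, int max_distance) {
    for (size_t i = 0; i < n; i++)
        if (strcmp(requested, trusted[i]) == 0) return NULL;  /* exact match is fine */
    for (size_t i = 0; i < n; i++) {
        int d = edit_distance(requested, trusted[i]);
        if (d >= 0 && d <= max_distance) return trusted[i];
    }
    return NULL;
}
```

For example, with `{"puppeteer", "bignumber.js"}` as the trusted list and a threshold of 2, `lookalike_of("pupeteer", ...)` returns `"puppeteer"`, while an exact `"puppeteer"` returns NULL.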

I mean, this kind of stuff was going to happen.

The more-important and more-widely-used open source software is, the more appealing supply-chain attacks against it are.

The world where it doesn’t happen is one where open source doesn’t become successful.

I expect that we’ll find ways to mitigate stuff like this. Run a lot more software in isolation, have automated checking stuff, make more use of developer reputation, have automated code analysis, have better ways to monitor system changes, have some kind of “trust metric” on packages.

Go back to the 1990s, and most everything I sent online was unencrypted. In 2024, most traffic I send is encrypted. I imagine that changes can be made here too.

There are a lot of ways to measure that.

I guess one reasonable metric is how long I probably played it. Close Combat II: A Bridge Too Far and an old computer pinball game, Loony Labyrinth, probably rank pretty highly.

Another might be how long after its development it’s still considered reasonably playable. I’d guess that maybe something like Tetris or Pac-Man might rate well there.

Another might be how influential the game is. I think that “genre-defining” games like Wolfenstein 3D would probably win there.

Another might be how impressed I was with a game at the time of release. Games that made major technical or gameplay leaps would rank well there. Maybe Wolfenstein 3D or Myst.

Another might be which games I play today, at least once I’ve played them enough to become familiar with them, since presumably I could play pretty much any game out there, and so my choice, if made rationally, should identify the best options for me that I’m aware of. That won’t work for every genre, as it requires replayability (an adventure game where experiencing the story once through is kind of the point would fall down here), but I think that it’s a decent test of the library of games out there. Recently I’ve played Steel Division II singleplayer, Carrier Command 2 singleplayer, Cataclysm: Dark Days Ahead, and Shattered Pixel Dungeon. RimWorld and Oxygen Not Included tend to be in the recurring cycle.