Aren’t these sizes a marketing gimmick anyway? They used to mean the gate size of a transistor, but I don’t think that’s been the case for a few years now.

They’re generally consistent within a single manufacturer’s product lines; however, you absolutely cannot compare them between manufacturers because the definitions are completely different.

And that’s what benchmarks are for.

It refers to feature size, rather than component.

No, it still means what it always has, and each step still introduces good gains.

It’s just that each step is getting smaller and MUCH more difficult, and we still aren’t entirely sure what to do after we get to 1nm. In the past we were able to go from 65nm in 2006 to 45nm in 2008. We had 7nm in 2020, but in that same two-year time frame we were only able to get to 5nm.
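Taking the node names at face value (which, as other replies here point out, they no longer are), the two shrinks quoted above can be compared in absolute and relative terms. A minimal Python sketch; the step list just reuses the names from the comment, and `shrink_stats` is a hypothetical helper:

```python
# Nominal node names (nm) as quoted in the comment above.
# Caveat: on modern processes these names are not physical dimensions.
STEPS = [
    ("65nm -> 45nm", 65.0, 45.0),
    ("7nm -> 5nm", 7.0, 5.0),
]


def shrink_stats(old_nm: float, new_nm: float) -> tuple[float, float, float]:
    """Return (absolute shrink in nm, linear scale factor, implied area scale factor)."""
    absolute = old_nm - new_nm  # nanometres removed from the name
    linear = new_nm / old_nm    # linear scale factor between the two names
    area = linear ** 2          # area scale if the name were a real dimension
    return absolute, linear, area


for label, old, new in STEPS:
    absolute, linear, area = shrink_stats(old, new)
    print(f"{label}: -{absolute:g} nm, linear x{linear:.2f}, area x{area:.2f}")
```

Under this naive reading the absolute step collapses from 20nm to 2nm, while the relative shrink stays close to the traditional ~0.7x linear (~0.5x area) per-node target (about 0.69x vs 0.71x linear).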

And now we’ve reached the need for decimal steps with this 1.6nm node.

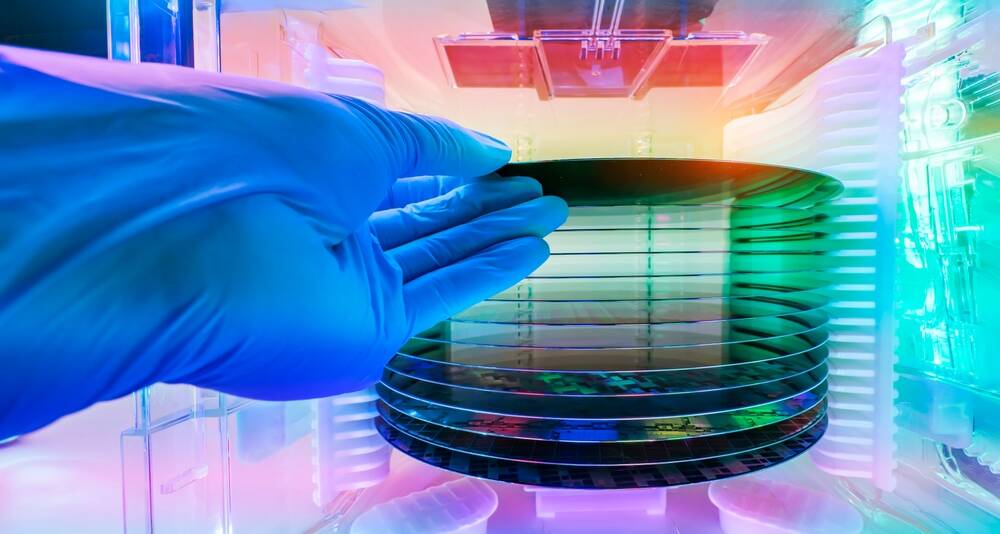

Later each new generation process became known as a technology node[17] or process node,[18][19] designated by the process’ minimum feature size in nanometers (or historically micrometers) of the process’s transistor gate length, such as the “90 nm process”. However, this has not been the case since 1994,[20] and the number of nanometers used to name process nodes (see the International Technology Roadmap for Semiconductors) has become more of a marketing term that has no standardized relation with functional feature sizes or with transistor density (number of transistors per unit area).[21]

https://en.wikipedia.org/wiki/Semiconductor_device_fabrication#Feature_size

Personally, I don’t mind that they try to simplify these extremely complicated chip layouts, but to keep calling it X nanometers when nothing on the chip is actually that size is just plain misleading.

Yes, boss, I have always worked 42 hours per week… Huh? No, what do you mean “actual hours”?

So they found a way to inscribe more arcane runes onto the mystic rock thus increasing its mana capacity?

Can’t wait for Python on top of WebAssembly on top of React on top of Electron frameworks to void that advancement.

The more efficient the machines, the less efficient the code; such is the way of life.

I’m wondering how much further size reductions in lithography technology can take us before we need to find new exotic materials or radically different CPU concepts.

We’ve already been doing radically different design concepts, chiplets being a massive one that jumps to mind.

But also things like specialised hardware accelerators, 3D stacking, or the upcoming backside power delivery tech.

I mean, chiplets are neat… but they are just the same old CPU, just in Lego form, and that’s mostly to increase yields vs. monolithic designs. Same with accelerators, stacking, etc.

I mean radical new alien designs (like quantum CPUs, for example), since we must be reaching the limits of what silicon and lithography can do.

Photonics is the game changer: putting little LEDs on chips and making terabyte-per-second interconnects with low heat, long range, and better signal integrity.

This is what I’m talking about. This kind of weird new shit to overcome the limits we must be running into by now with the standard silicon and lithography that we’ve been using and evolving for 40 years.

I don’t think it is mostly just the same CPU with a slight twist. It’d be mind-blowing tech if you showed it to some electrical engineers from 20, 15, shit even 10 years ago. Chiplets were and are a big deal, and have plenty of advantages beyond yield improvements.

I also disagree with stacking not being a crazy advancement. Stacking is big, especially for memory and cache, which most chip designs are starved of (and that will only get worse, as memory and cache don’t shrink as well as logic).

There’s more to new, radical chip design than switching the material they use. Chiplets were a radical change. I think the only reason you don’t classify them as an “alien” design is that you’re now used to them. If carbon nanotube monolithic CPU designs had come out a while ago, I think you’d have similarly gotten used to them and would think of them as the new normal rather than something entirely different.

Splitting up silicon into individual modules and being able to trivially swap out chiplets seems more alien to me than if they simply moved from silicon transistors to [material] transistors.

What’s the threshold for quantum tunneling to be an issue? Cuz at such small scales, particles can…teleport through stuff.

It’s important to note that 1.6nm is just a marketing naming scheme and has nothing to do with the actual size of the transistors.

https://en.m.wikipedia.org/wiki/3_nm_process

Look at the 3nm process and how the gate pitch is 48nm and the metal pitch is 24nm. The names of the processes stopped having anything to do with the size of the transistors over a decade ago. It is stupid.
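To put the quoted numbers side by side, here is a small Python sketch comparing them with the “3” in the name. It uses only the two pitches from the comment; `half_pitch` and `times_label` are illustrative helpers, and the half-pitch step assumes equal line and space widths:

```python
# Numbers as quoted in the comment for the "3nm" process; other foundries'
# 3nm-class processes use different values.
NODE_LABEL_NM = 3.0
PITCHES_NM = {"gate pitch": 48.0, "metal pitch": 24.0}


def half_pitch(pitch_nm: float) -> float:
    """A pitch is line width plus spacing; half of it equals the line width when both are equal."""
    return pitch_nm / 2


def times_label(length_nm: float, label_nm: float = NODE_LABEL_NM) -> float:
    """How many times larger a physical dimension is than the node's marketing label."""
    return length_nm / label_nm


for feature, pitch in PITCHES_NM.items():
    hp = half_pitch(pitch)
    print(f"{feature}: {pitch:g} nm ({times_label(pitch):g}x the label), "
          f"half-pitch {hp:g} nm ({times_label(hp):g}x the label)")
```

Even the smallest of these derived dimensions (a 12nm metal half-pitch) is four times the “3nm” in the name.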

Quantum tunneling has been an ongoing issue. Basically, they are using different transistor designs and different gate materials (such as high-k dielectrics in place of silicon dioxide), and accepting that it is a reality by adding more error checking/correction.
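On the “what’s the threshold” question: there isn’t a sharp one. The usual textbook estimate for a rectangular barrier is T ≈ exp(−2κd) with κ = √(2m(V−E))/ħ, so leakage grows exponentially as the barrier (for example a gate insulator) gets thinner. A rough Python sketch, assuming a free electron and an assumed 3 eV barrier height; real gate stacks have different barrier heights and effective masses, and the function names are illustrative:

```python
import math

# Physical constants (CODATA values, SI units).
HBAR = 1.054571817e-34            # reduced Planck constant, J*s
ELECTRON_MASS = 9.1093837015e-31  # kg; free-electron mass (assumption: real oxides differ)
EV = 1.602176634e-19              # joules per electron-volt


def decay_constant(barrier_ev: float, mass_kg: float = ELECTRON_MASS) -> float:
    """kappa = sqrt(2 m (V - E)) / hbar in 1/m, where barrier_ev is V - E in eV."""
    return math.sqrt(2 * mass_kg * barrier_ev * EV) / HBAR


def tunneling_probability(thickness_nm: float, barrier_ev: float = 3.0) -> float:
    """Approximate transmission T ~ exp(-2 * kappa * d); the 3 eV default is an assumption."""
    kappa = decay_constant(barrier_ev)
    return math.exp(-2 * kappa * thickness_nm * 1e-9)


for d in (0.5, 1.0, 2.0, 3.0):
    print(f"{d:.1f} nm barrier: T ~ {tunneling_probability(d):.1e}")
```

Under these assumptions each extra nanometre of barrier cuts the estimate by roughly eight orders of magnitude, which is why the thickness of the insulating layers matters far more here than the node name.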