It’s absurd that some of the larger LLMs now use hundreds of billions of parameters (e.g. Llama 3.1 with 405B).

This doesn’t really seem like a smart use of resources if you need several of the largest GPUs available just to run one conversation.
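
A rough back-of-the-envelope check of the "several GPUs" claim. This is a sketch that counts the weights only (no KV cache or activations) and assumes 80 GB of memory per GPU; the bytes-per-parameter values are the usual 16-, 8- and 4-bit formats.

```python
# Rough estimate of GPU memory needed just to hold an LLM's weights.
# Assumptions: weights only (no KV cache, no activations), 80 GB per GPU.
import math


def weight_memory_gb(params: float, bytes_per_param: float) -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


def gpus_needed(params: float, bytes_per_param: float, gpu_mem_gb: float = 80.0) -> int:
    """Minimum number of GPUs whose combined memory fits the weights."""
    return math.ceil(weight_memory_gb(params, bytes_per_param) / gpu_mem_gb)


if __name__ == "__main__":
    params = 405e9  # Llama 3.1 405B
    for label, bpp in [("16-bit", 2), ("8-bit", 1), ("4-bit", 0.5)]:
        print(f"{label}: {weight_memory_gb(params, bpp):.1f} GB -> "
              f"{gpus_needed(params, bpp)} x 80 GB GPUs")
```

At 16 bits per weight that is 810 GB, i.e. eleven 80 GB GPUs before a single token of context is stored; even aggressive 4-bit quantization still needs three.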

There is no way to be 100% sure, but:

I can and do self-host, but I’m not willing to provide these services for free. I don’t want to be responsible for other people’s passwords or family photos.

That’s where good, privacy-respecting services come into play. Instead of hosting for my neighbours, I would recommend mailbox.org, Bitwarden, Ente or a hosted Nextcloud.

The blog post contains an interesting timeline. Apparently, the first fix was not sufficient, so if you updated Vaultwarden before November 18, update it again.

Copy of the timeline:

The only ‘big’ customer of thin clients I know is a hospital. I believe thin clients are well suited for highly standardized and strictly controlled workplaces.

Why do you say that no business wants this? Obviously, thin clients have been a thing for decades now. This is just another thin client, nothing more.

My HP has a 65 W CPU built in; when it’s running at full load, it is quite loud.

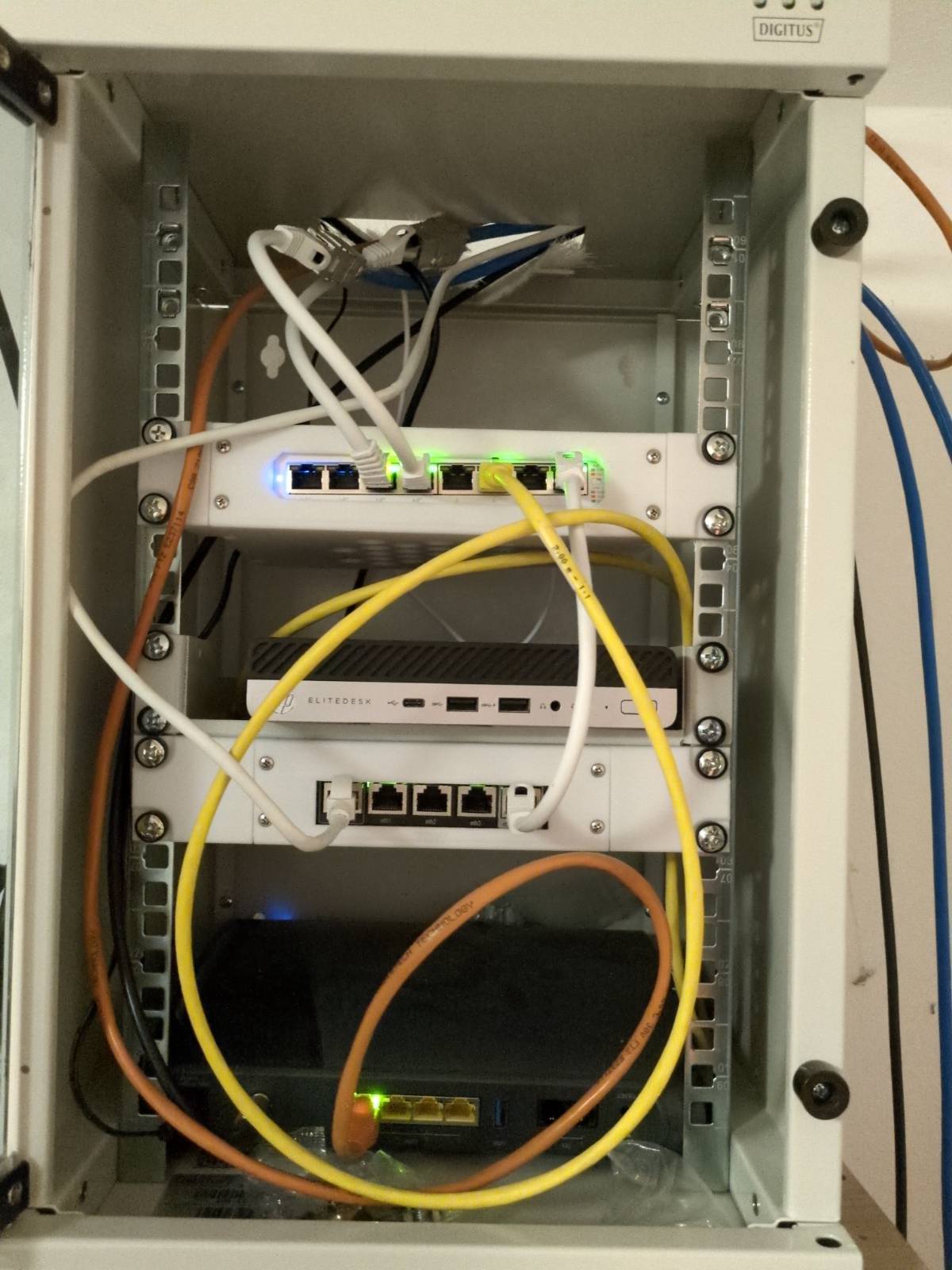

Small 10-inch rack with some 3D-printed rack mounts.

Maybe try a TLS-based VPN? This should work almost anywhere, because it looks like a standard HTTPS connection.

WireGuard, even on port 443, is different because it uses UDP rather than the more widely used TCP.

I don’t think these two games can be reasonably compared: HL2 is currently free, while Concord was a paid game.

I like it :) Can you provide a link to the sensors you used?

You’re supposed to host this yourself.

Set the DNS TTL (cache time) to 60 seconds.

Run the script on every host, staggered in time so that the hosts don’t access the API simultaneously (e.g. run the script every other minute).

With this approach, you get automatic failover in at most 3 minutes.
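
Where the "at most 3 minutes" comes from, as a small sketch. It assumes the numbers above (60 s TTL, script every 120 s on each host), that the host that went down no longer runs its copy of the script, and it ignores API propagation delay and resolvers that don’t honour the TTL. The host names `web1`/`web2` are hypothetical.

```python
# Worst-case failover time for the DNS-based approach.
# Assumptions: DNS TTL of 60 s, the script runs on each host every 120 s,
# API propagation delay is ignored, and resolvers honour the TTL.


def worst_case_failover_s(ttl_s: int, script_interval_s: int) -> int:
    """An outage that starts right after a check goes unnoticed for up to one
    script interval; clients may then keep using the cached record for up
    to one TTL."""
    return script_interval_s + ttl_s


def staggered_offsets(hosts: list[str], interval_s: int) -> dict[str, int]:
    """Spread the hosts' start times evenly over one interval so they don't
    hit the DNS provider's API at the same moment."""
    step = interval_s // len(hosts)
    return {host: i * step for i, host in enumerate(hosts)}


if __name__ == "__main__":
    print(worst_case_failover_s(ttl_s=60, script_interval_s=120))
    print(staggered_offsets(["web1", "web2"], 120))  # hypothetical host names
```

With two hosts, one runs on even minutes and the other on odd minutes. If one of them dies, only the survivor keeps checking, so detection can take up to 2 minutes, plus up to 1 minute of cached DNS answers: 180 s in total.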

I’d host it on both web servers. The script sets the A records to all the servers that are online. Obviously, the script also has to check its own service.

It seems a little hacky, though; for a business use case I would use another approach.
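
A minimal sketch of such a script, using only the Python standard library. The server list (documentation IPs from 203.0.113.0/24) and `update_a_records()` are hypothetical placeholders; the actual update has to go through whatever API your DNS provider offers, which isn’t specified here.

```python
# Per-host failover script: check every web server (this host included)
# and publish one A record per server that is up.
import http.client
import urllib.request

# HYPOTHETICAL host list; include this host itself, since the script
# also has to check its own service.
SERVERS = {
    "203.0.113.10": "http://203.0.113.10/",
    "203.0.113.11": "http://203.0.113.11/",
}


def is_up(url: str, timeout: float = 5.0) -> bool:
    """Treat a server as online if it answers with a non-error HTTP status in time."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 400
    except (OSError, http.client.HTTPException):
        # URLError/HTTPError (4xx/5xx), refused connections and timeouts are
        # OSError subclasses; malformed HTTP responses raise HTTPException.
        return False


def desired_records(health: dict[str, bool]) -> list[str]:
    """IPs of all servers that passed the health check, in a stable order."""
    return sorted(ip for ip, ok in health.items() if ok)


def update_a_records(ips: list[str]) -> None:
    """HYPOTHETICAL placeholder: call your DNS provider's API here."""
    print("would set A records to:", ips)


def main() -> None:
    health = {ip: is_up(url) for ip, url in SERVERS.items()}
    ips = desired_records(health)
    # If nothing answers, this host's own network is the likely culprit,
    # so leave DNS untouched instead of publishing an empty record set.
    if ips:
        update_a_records(ips)
```

`main()` would be run from cron (or a systemd timer) on each web server, staggered as described above.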

OP said that they have a static website, which eliminates the need for session sync.

Your challenge is that you need a load balancer. But if you host the load balancer yourself (e.g. on a VPS), you could just as well host your websites directly there…

My approach would be DNS-based. You can have multiple DNS A records, and the client picks one of them. With a little script, you could remove a server’s A record if that server goes down. This way, you wouldn’t need a central piece of hardware.
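
What "the client picks one of them" looks like from the client side, as a small sketch: the resolver hands back every A record, and Python’s `socket.create_connection()` tries each resolved address in turn until one accepts the connection. Many other clients, including common browsers, behave similarly, which softens an outage even before the script removes the dead record.

```python
import socket


def a_records(hostname: str, port: int = 443) -> list[str]:
    """IPv4 addresses the system resolver returns for hostname (all A records)."""
    infos = socket.getaddrinfo(hostname, port, family=socket.AF_INET,
                               type=socket.SOCK_STREAM)
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr is (ip, port).
    return sorted({info[4][0] for info in infos})


def connect_any(hostname: str, port: int = 443, timeout: float = 5.0) -> socket.socket:
    """Connect to the first address that works: create_connection() walks
    through the resolved addresses until one of them succeeds."""
    return socket.create_connection((hostname, port), timeout=timeout)
```

Calling `a_records()` on a name with two A records should list both IPs. DNS servers often rotate the order between answers, which spreads clients across the servers.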

No, for these reasons:

I have a VPS for these tasks, and I host a few sites for friends and family.

AFAIK, the only reason not to use Let’s Encrypt is when you are not able to automate certificate renewal.

As paid certificates are valid for 12 months, you have to replace them less often than a Let’s Encrypt certificate, which is only valid for 90 days.

At work, we pay something like 30-50 € per certificate per year. Since every certificate change costs working time, buying a certificate is more economical for us.

But generally, it is best to use Let’s Encrypt whenever you can automate the process (e.g. with nginx).
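
The trade-off in numbers, as a sketch with HYPOTHETICAL inputs: the 30 minutes per manual swap and 60 €/h are placeholders, not figures from this thread; 40 € is the middle of the quoted price range. It assumes Let’s Encrypt’s 90-day certificates are renewed about 30 days before expiry (certbot’s default), i.e. roughly every 60 days.

```python
# Yearly cost of certificate handling when each swap is done by hand.
# HYPOTHETICAL inputs: minutes per swap and hourly rate are placeholders.


def yearly_cost(cert_price_eur: float, swap_interval_days: float,
                minutes_per_swap: float, hourly_rate_eur: float) -> float:
    """Certificate price plus the labour for all swaps in one year."""
    swaps_per_year = 365 / swap_interval_days
    labour_eur = swaps_per_year * (minutes_per_swap / 60) * hourly_rate_eur
    return cert_price_eur + labour_eur


if __name__ == "__main__":
    paid = yearly_cost(40, 365, 30, 60)         # one manual swap per year
    le_manual = yearly_cost(0, 60, 30, 60)      # ~6 manual swaps per year
    le_automated = yearly_cost(0, 60, 0, 60)    # renewal fully automated
    print(f"paid: {paid:.2f} EUR, LE manual: {le_manual:.2f} EUR, "
          f"LE automated: {le_automated:.2f} EUR")
```

With manual swaps, the "free" certificate ends up more expensive (about 182 € vs. 70 € with these placeholder values); once renewal is automated, the labour term drops to zero.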

As for the question of trust: certificates are issued in such a way that the certificate authority never has access to your private key. You don’t trust the CA with anything (except maybe your payment data).

I don’t think your brain can be reasonably compared with an LLM, just like it can’t be compared with a calculator.