Driverless cars worse at detecting children and darker-skinned pedestrians, say scientists

Researchers call for tighter regulations following major age- and race-based discrepancies in AI autonomous systems.

LiDAR doesn’t see skin color or age. Radar doesn’t either. Infra-red doesn’t either.

That’s a fair observation! LiDAR, radar, and infra-red systems might not directly detect skin color or age, but the point being made in the article is that there are challenges when it comes to accurately detecting darker-skinned pedestrians and children. It seems that the bias could stem from the data used to train these AI systems, which may not have enough diverse representation.

The main issue, as someone else pointed out as well, is in image detection systems only, which is what this article is primarily discussing. Lidar does have its own drawbacks, however. I wouldn’t be surprised if those systems still didn’t detect children as reliably. Skin color definitely wouldn’t be a consideration for it, though, as that’s not really how that tech works.

Ya hear that Elno?

I’m sick of the implication that computer programmers are intentionally or unintentionally adding racial bias to AI systems. As if a massive percentage of software developers in NA aren’t people of color. When can we have the discussion where we talk about how photosensitive technology and contrast ratio work?
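For anyone who wants the contrast point in concrete terms, here's a rough sketch. It uses Weber contrast, which is one common definition among several, to compare how strongly a target stands out from its background. The luminance numbers are made-up placeholders in arbitrary units, not measurements, and a real camera-based detector depends on far more than a single contrast figure.

```python
def weber_contrast(target_luminance: float, background_luminance: float) -> float:
    """Weber contrast: (L_target - L_background) / L_background."""
    if background_luminance <= 0:
        raise ValueError("background luminance must be positive")
    return (target_luminance - background_luminance) / background_luminance


# Hypothetical luminance values in arbitrary units (NOT measured data).
dark_road = 10.0
brighter_target = 40.0
dimmer_target = 15.0

print(weber_contrast(brighter_target, dark_road))  # 3.0
print(weber_contrast(dimmer_target, dark_road))    # 0.5
```

The lower the number, the less signal a purely camera-based system has to work with before any training-data questions even come into it.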

There’s still a huge racial disparity in tech workforces. For one example, at Google according to their diversity report (page 66), their tech workforce is 4% Black versus 43% White and 50% Asian. Over the past 9 years (since 2014), that’s an increase from 1.5% to 4% for Black tech workers at Google.

There’s also plenty of news and research illuminating bias in trained models, from commercial facial recognition sets trained with >80% White faces to Timnit Gebru being fired from Google’s AI Ethics group for insisting on admitting bias and many more.

I also think it overlooks serious aspects of racial bias to say it’s hard. Certainly, photographic representation of a Black face is going to provide less contrast within the face than for lighter skin. But that’s also ingrained bias. The thing is, people (including software engineers) solve tough problems constantly, and we have to choose which details to focus on and rely on our experiences, and our experience is centered around ourselves. Of course racist outcomes and stereotypes are natural, but we can identify the likely harmful outcomes and work to counter them.

Wouldn’t good driverless cars use radars or lidars or whatever? Seems like the biggest issue here is that darker skin tones are harder for cameras to see

I think many driverless car companies insist on only using cameras. I guess lidars/radars are expensive.

Worse than humans?!

I find that very hard to believe.

We consider it the cost of doing business, but self-driving cars have an obscenely low bar to surpass us in terms of safety. The biggest hurdle it has to climb is accounting for irrational human drivers and other irrational humans diving into traffic that even the rare decent human driver can’t always account for.

American human drivers kill more than ten 9/11s’ worth of people every year. I’d rather modernizing and automating our roadways were a moonshot national endeavor, but we don’t do that here anymore, so we complain when the incompetent, narcissistic asshole who claimed the project for private profit turned out to be an incompetent, narcissistic asshole.

The tech is inevitable, there are no physics or computational power limitations standing in our way to achieve it, we just lack the will to be a society (that means funding stuff together through taxation) and do it.

Let’s just trust another billionaire to do it for us and act in the best interests of society though, that’s been working just gangbusters, hasn’t it?

Not necessarily worse than humans, no, just worse at detecting them than at detecting light-skinned and tall people.

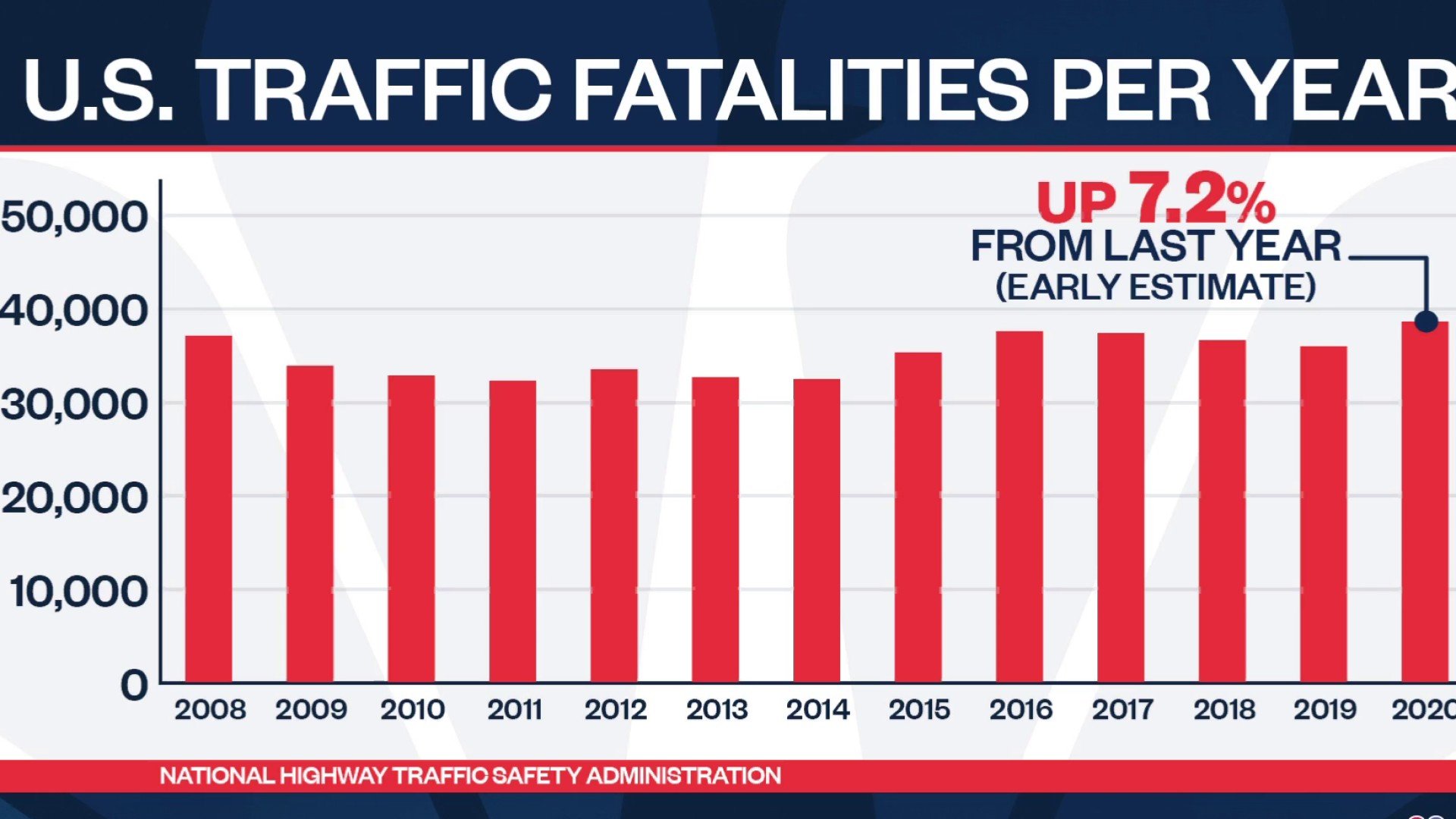

2020 was lockdown year, how on earth have accidents increased in the US?

Any black people or children in your ‘study’?

Self driving cars are republicans?

What? No. They’d need to recognise them better - otherwise how can they swerve to make sure they hit them?

Cars should be tested for safety in collisions with children and it should affect their safety rating and taxes. Driverless equipment shouldn’t be allowed on the road until these sorts of issues are resolved.

A single FLIR camera would help massively. They don’t care about colour or height. Only temperature.

I could make a warm water balloon in the shape of a human and it would stop the car then. Maybe a combination of all the various types of technologies? You’d still have to train the model on all the various kinds of humans though.
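The "combination of technologies" idea is usually called sensor fusion. Here's a minimal sketch of one simple way to do it, a noisy-OR combination, assuming each sensor reports an independent detection confidence between 0 and 1. That independence is a big simplification, and the function name, sensor names and numbers are all hypothetical, not taken from any real vehicle stack.

```python
from typing import Iterable


def noisy_or(confidences: Iterable[float]) -> float:
    """Combine per-sensor detection confidences with a noisy-OR.

    Treats each confidence as an independent probability that the
    detection is real and returns 1 - product(1 - c_i).
    """
    miss_probability = 1.0
    for c in confidences:
        if not 0.0 <= c <= 1.0:
            raise ValueError("each confidence must be in [0, 1]")
        miss_probability *= 1.0 - c
    return 1.0 - miss_probability


# Hypothetical confidences for one pedestrian (illustrative values only).
camera, thermal, lidar = 0.3, 0.9, 0.6
fused = noisy_or([camera, thermal, lidar])
print(f"fused confidence: {fused:.2f}")
```

With this kind of rule a weak camera detection can be rescued by the other sensors, but the warm balloon would also trigger it through the thermal channel alone, and each per-sensor model still needs to be trained on all kinds of humans.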

They need Google Pixel cameras.

#blacklivesmatter

Pretty sure Teslas target kids.

It’s almost like less contrast against a black road or smaller targets are computationally more difficult to detect or something! Weird! How about instead of this pretty clear fact, we get outraged and claim it’s racism or something! Yeah!!

Sue the road builders for building racist roads!

/s

If you say the cars are racist you might get the far right to buy into them…