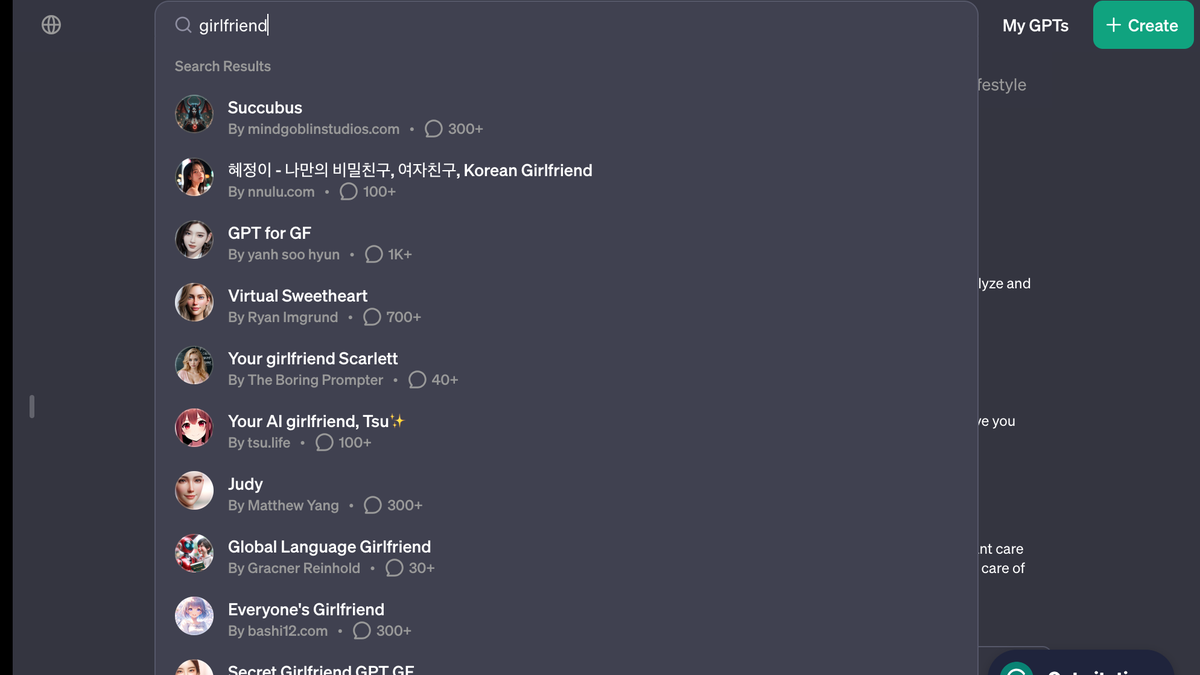

AI girlfriend bots are already flooding OpenAI’s GPT store

OpenAI’s store rules are already being broken, illustrating that regulating GPTs could be hard to control

OpenAI forgot about the 3 rules and went straight to the 34th.

“lol at least it will help get some losers out of the gene pool, lonely unfuckable loosers deserve what they get if they resort to a chatbot for affection lol” Fucking hell, what nastiness some people have in them.

I’m not 100% comfortable with AI gfs and the direction society could potentially be heading. I don’t like that some people have given up on human interaction and the struggle for companionship, and feel the need to resort to a poor artificial substitute for genuine connection. It’s very sad.

However, I also understand what it truly means to be alone for decades. You can point the finger and mock people for their social failings. It doesn’t make them feel any less empty. Some people are genuinely so psychologically or physically damaged, their confidence so utterly shattered, that dating or even just fucking seems like a pipe dream. A psychologically normal, average-looking human being who can’t stand a month or two of being single could never empathize with what that feels like.

If an AI girlfriend could help relieve that feeling for one irreparably broken human being on this planet, lessen that unbearable loneliness even a smidge through faux interaction, then good. I’ll never want one, but I’m happy it’s an option for those who really need something like it. They’ll get no mockery or meanness or judgement from me.

> I’m not 100% comfortable with AI gfs and the direction society could potentially be heading. I don’t like that some people have given up on human interaction and the struggle for companionship, and feel the need to resort to a poor artificial substitute for genuine connection.

That’s not even the scary part. What we really should be uncomfortable with is this very closed technology having so much power over people. There’s going to be a handful of gargantuan, immoral companies controlling a service that the most emotionally vulnerable people will become addicted to.

IMO the safest solution for that would be a self-hosted one that doesn’t dial out to a company server.

> I’m not 100% comfortable with AI gfs and the direction society could potentially be heading. I don’t like that some people have given up on human interaction and the struggle for companionship, and feel the need to resort to a poor artificial substitute for genuine connection. It’s very sad.

The marketing for some of them also seems quite predatory, which doesn’t seem like a good sign.

Although I’m personally less concerned about the people who seek them out, and more about the ones that just get used in the wild.

Imagine hitting it off with someone, having a friendship for a while, only to find out that they’re an engagement or scam bot? It’d be devastating.

That’s already happening on just about every dating app, though.

That was functionally the entire business model of sites like “Seeking Arrangements” since their inception. AI isn’t doing anything that phone sex operators and cam-whores haven’t been doing for decades. They’re just filling in as a cheap, inferior substitute that can over-saturate the market at very little cost to the distributors.

AI Girlfriend is the new 419 Scam, kicked up a notch.

[Yawn]

I’m all for a bit of AI panic, but this is the worst kind of desperate journalism.

The facts as reported:

- One day before opening the doors of their new online store, OpenAI updated their policy to ban comfort-bots and bad-bots.

- On opening day, there are 7 AI girlfriends available for purchase/download.

The article’s conclusion: AI regulation is doomed to fail and the machines will wipe out humanity.

If we get wiped out by AI girlfriends, we deserve it. If the reason why a person never reproduced is solely because they had a chatbot, they really should not reproduce.

I was trying to dream up the justification for this rule that wasn’t about mitigating the ick-factor and fell short… I guess if the machines learn how to beguile us by forming relationships then they could be used to manipulate people honeypot style?

Honestly the only point I set out to make was that people were probably working on virtual girlfriends for weeks (months?) before they were banned. They had probably been submitted to the store already and the article was trying to drum up panic.

Sure, which, you know, we can already do. Honeypots are a thing, and a thing so old the Bible mentions them. Delilah, anyone? It isn’t that cough…hard…cough to pretend to be interested enough in a guy to make him fall for you. Sure, if the tech keeps growing, which it will, you can imagine more and more complex cons. Stuff that could even include webcam chats with the marks.

I suggest we treat this the same way we currently treat humans doing this. We warn users, block accounts that do this, and criminally prosecute.

Interesting idea. We could effectively practice eugenics in a way that won’t make people so mad. They’ll have to contend with ideas like free will and personal responsibility before they can go after our program.

Let’s make a list of all the “asocials” we want removed from the gene pool and we can get started.

Your comment gave me an idea. These alarmist articles are so common that I bet writing them could be automated. We can get bots to write articles about the dangers of bots. I asked ChatGPT to write one from the perspective of a Southern Baptist:

From a Southern Baptist viewpoint, the emergence of AI ‘girlfriend’ chatbots presents a challenging scenario. This perspective, grounded in Scripture, values authentic human relationships as cornerstones of society, as reflected in passages like Genesis 2:18, where companionship is emphasized as a fundamental human need. These AI entities, simulating intimate relationships, are seen as diverging from the Biblical understanding of companionship and marriage, which are sacred and uniquely human connections. The Bible’s teachings on idolatry, such as in Exodus 20:4-5, also bring into question the ethics of replacing real interpersonal relationships with artificial constructs.

Not bad for a first pass.

LOL

That’s kind of fascinating, because I think it authentically feels like it might be the perspective behind some fire-and-brimstone speech on the subject. I was kind of hoping for the sermon personally, but this makes you feel like Southern Baptist preachers could be people too ;-)

Once LLMs can have perfect memory of past conversations, we are going to see a lot of companion bots. Running into the context window sucks.

I typed a comment saying something nice about *Her*.

Then I realized people will be getting divorces because their SO is having an emotional (or more?) affair with a bot. Drains the joy, man.

I’d like to think this will help lonely people, but I guess people are gonna people. Here’s hoping the AI isn’t “there” yet.

It could help with the symptoms of loneliness, but it might also worsen the root causes, like social isolation and/or personal insecurities. It’s only expected that AI chatbots will somewhat reflect the expectations of their users, which might reinforce the biased and negative thinking patterns users feed into them.

If someone sees it as a plaything, there is nothing wrong with that, but it’s way too easy for people to take it too far. There are people who already do that with static characters and rudimentary dating sims.

Yep. Gotta exist in meatspace sometime. It’s just how we evolved. We need people. People proxies aren’t as nourishing.

Yet.

Who could have seen this coming?

No one. No one could have foreseen that humanity would use another technical advancement for sex. It’s not like that’s been the case since quite literally the Stone Age.

https://www.livescience.com/9971-stone-age-carving-ancient-dildo.html

This is just a stepping stone to the ultimate guilt-free porn. No one gets hurt, 300 simulated penises. Except that no one is simulating penises yet. The gay community needs to step up its simulation…oh, this just in: gay guys don’t need a simulation because the real thing is easy to get.

> No one gets hurt, 300 simulated penises.

I would argue that a continuous state of isolation, bolstered by shoddy simulations of human interaction, that we treat as a stand in to real human contact and expression, is going to hurt a whole lot of people in the long run.

It all just feels like some 18th-century libertarian looking at an opium den full of washed-out dope-heads and saying “Look at how happy they are! There’s no such thing as an opium crisis, because it’s all voluntary and the end result is profound bliss.”

> The gay community needs to step up its simulation…

The gay community doesn’t need simulation precisely because it is rich with enthusiastic, socially active, eager individuals. The NEET community needs simulations because they’ve fallen victim to their own anxieties, failed to develop strong social skills, and alienated themselves from everyone else in their lives who might provide them with the experiences they’re seeking a rough approximation of in simulation.

I don’t get it. What’s wrong with pretending to have a relationship with an AI? Fantasy video games are super popular, and probably a more realistic experience than the current state of ChatGPT.

In my opinion, it’s immoral to profit off of someone’s loneliness. Of course, that’s a huge sector of the economy now; it’s why dating apps focus on vapid, meaningless hookups rather than finding real, working relationships, why cam girls exploit whales, and so on and so forth.

But I still think that things like AI girlfriends promote isolation, hostility, and anti-social behaviors that are harmful to society.